François Chollet

Co-founder @ndea. Co-founder @arcprize. Creator of Keras and ARC-AGI. Author of 'Deep Learning with Python'.

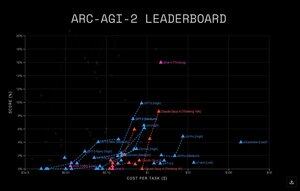

Grok 4 is still state-of-the-art on ARC-AGI-2 among frontier models.

15.9% for Grok 4 vs 9.9% for GPT-5.

ARC Prize, Aug 8, 01:29

GPT-5 on ARC-AGI Semi Private Eval

GPT-5

* ARC-AGI-1: 65.7%, $0.51/task

* ARC-AGI-2: 9.9%, $0.73/task

GPT-5 Mini

* ARC-AGI-1: 54.3%, $0.12/task

* ARC-AGI-2: 4.4%, $0.20/task

GPT-5 Nano

* ARC-AGI-1: 16.5%, $0.03/task

* ARC-AGI-2: 2.5%, $0.03/task
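The score and cost figures above can be combined into a rough cost-efficiency view. The sketch below divides each reported score by its per-task cost. This "percentage points per dollar" ratio is an illustrative derived metric, not one ARC Prize reports, and the function names are hypothetical.

```python
# Reported ARC-AGI Semi Private Eval numbers: (score in %, cost in USD per task),
# copied from the ARC Prize post above.
RESULTS = {
    "GPT-5":      {"ARC-AGI-1": (65.7, 0.51), "ARC-AGI-2": (9.9, 0.73)},
    "GPT-5 Mini": {"ARC-AGI-1": (54.3, 0.12), "ARC-AGI-2": (4.4, 0.20)},
    "GPT-5 Nano": {"ARC-AGI-1": (16.5, 0.03), "ARC-AGI-2": (2.5, 0.03)},
}


def points_per_dollar(score_pct: float, cost_per_task: float) -> float:
    """Percentage points of score per dollar spent per task (illustrative, derived metric)."""
    return score_pct / cost_per_task


def rank_by_efficiency(benchmark: str) -> list[tuple[str, float]]:
    """Return (model, points-per-dollar) pairs, most cost-efficient first."""
    rows = [
        (model, points_per_dollar(*scores[benchmark]))
        for model, scores in RESULTS.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for bench in ("ARC-AGI-1", "ARC-AGI-2"):
        for model, eff in rank_by_efficiency(bench):
            print(f"{bench:10} {model:11} {eff:7.1f} pts/$")
```

On these numbers GPT-5 Nano ranks first on both benchmarks despite the lowest absolute scores. A plain ratio ignores how far each model is from solving the benchmark, so it should not be read on its own.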


GPT-5 results on ARC-AGI 1 & 2!

Top line:

65.7% on ARC-AGI-1

9.9% on ARC-AGI-2


The paper "Hierarchical Reasoning Models" has been making the rounds lately, collecting tens of thousands of likes on Twitter across dozens of semi-viral threads, which is quite unusual for a research paper.

The paper claims 40.3% accuracy on ARC-AGI-1 with a tiny model (27M parameters) trained from scratch without any external training data -- if real, this would represent a major reasoning breakthrough.

I just did a deep dive on the paper and codebase...

It's a good read, detailed yet easy to follow. I think the ideas presented are quite interesting and the architecture is likely valuable.

The concept reminds me of many different ideas I encountered during the "golden age" of DL architecture research, circa 2016-2018. This type of research hasn't been popular for a while, so it's nice to see renewed interest in alternative architectures.

However, the experimental setup appears to be critically flawed, which means that we currently have no empirical signal (at least from ARC-AGI) as to whether the architecture is actually helpful or not.

The ARC-AGI-1 experiment is doing the following, based on my read of the data preparation code:

1. Train on 876,404 tasks, which are augmentation-generated variants of 960 original tasks:

   * 400 from ARC-AGI-1/train
   * 400 from ARC-AGI-1/eval
   * 160 from ConceptARC

2. Test on 400 tasks (ARC-AGI-1/eval), by augmenting each task into ~1000 variants (in reality it's only 368,151 in total due to idiosyncrasies of the augmentation process), producing a prediction for each variant, and reducing predictions to N=2 via majority voting.
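The reduction step in item 2 can be sketched as a simple vote: each augmented variant yields a prediction, and the two most frequent distinct answers are kept (ARC-AGI scoring accepts two attempts per test output, hence N=2). This minimal sketch assumes the predictions have already been mapped back to the original task's orientation and colors; `top2_by_vote` is a hypothetical name, not a function from the HRM codebase.

```python
from collections import Counter

# Grids as nested tuples so that identical predictions are hashable and can be counted.
Grid = tuple[tuple[int, ...], ...]


def top2_by_vote(predictions: list[Grid]) -> list[Grid]:
    """Keep the N=2 most frequent distinct predictions.

    Counter.most_common orders equal counts by first appearance,
    so ties are broken in favor of the earlier prediction.
    """
    counts = Counter(predictions)
    return [grid for grid, _ in counts.most_common(2)]


if __name__ == "__main__":
    a = ((1, 0), (0, 1))
    b = ((0, 1), (1, 0))
    c = ((1, 1), (1, 1))
    # One prediction per augmented variant (already un-augmented).
    print(top2_by_vote([a, b, a, c, a, b]))
```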

In short: they're training on the test data.
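One way to see the leak is to compare the splits by original task identity rather than by augmented variant, since augmented variants of the same task never match exactly. The sketch below uses placeholder IDs that mirror the counts described above; the `split/index` naming scheme is hypothetical (real ARC tasks have their own IDs).

```python
def source_overlap(train_sources: set[str], test_sources: set[str]) -> float:
    """Fraction of test tasks whose original (pre-augmentation) task also appears in training."""
    if not test_sources:
        return 0.0
    return len(train_sources & test_sources) / len(test_sources)


# Placeholder IDs (hypothetical) mirroring the data preparation described above:
# 400 ARC-AGI-1/train + 400 ARC-AGI-1/eval + 160 ConceptARC source tasks for training,
# and the same 400 ARC-AGI-1/eval source tasks for testing.
TRAIN_SOURCES = (
    {f"arc1-train/{i}" for i in range(400)}
    | {f"arc1-eval/{i}" for i in range(400)}
    | {f"conceptarc/{i}" for i in range(160)}
)
TEST_SOURCES = {f"arc1-eval/{i}" for i in range(400)}

if __name__ == "__main__":
    share = source_overlap(TRAIN_SOURCES, TEST_SOURCES)
    print(f"{share:.0%} of test tasks were seen (in augmented form) during training")
```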

You might ask: wait, why is the accuracy 40% then, rather than 100%? Is the model severely underfit?

That's because the training data and the test data represent the same original tasks *in different variations*. Data augmentation is applied independently to the eval tasks in the training data and the eval tasks in the test data.

So what the experiment is measuring, roughly, is how the model manages to generalize to procedurally generated variants of the same tasks (i.e. whether the model can learn to reverse a fixed set of static grid transformations).
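To make "a fixed set of static grid transformations" concrete, here is a sketch of a common style of ARC augmentation: one of the 8 rotations/reflections of the square (the dihedral group) plus a color relabeling, each with an exact inverse. The specific transform set, the choice to keep color 0 fixed, and all function names are assumptions for illustration, not taken from the HRM codebase.

```python
import random

Grid = list[list[int]]


def rotate90(g: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise."""
    return [list(row) for row in zip(*g[::-1])]


def flip_h(g: Grid) -> Grid:
    """Mirror a grid left-to-right."""
    return [row[::-1] for row in g]


def dihedral(g: Grid, k: int) -> Grid:
    """Apply transform k in 0..7: (k % 4) clockwise quarter-turns, then a flip if k >= 4."""
    for _ in range(k % 4):
        g = rotate90(g)
    return flip_h(g) if k >= 4 else g


def inverse_dihedral(g: Grid, k: int) -> Grid:
    """Undo dihedral(g, k): undo the flip first, then rotate back."""
    if k >= 4:
        g = flip_h(g)
    for _ in range((4 - k % 4) % 4):
        g = rotate90(g)
    return g


def random_color_perm(rng: random.Random) -> list[int]:
    """Permutation of the 10 ARC colors; keeping 0 (background) fixed is an assumption."""
    rest = list(range(1, 10))
    rng.shuffle(rest)
    return [0] + rest


def invert_perm(perm: list[int]) -> list[int]:
    inv = [0] * len(perm)
    for src, dst in enumerate(perm):
        inv[dst] = src
    return inv


def augment(g: Grid, k: int, perm: list[int]) -> Grid:
    return [[perm[c] for c in row] for row in dihedral(g, k)]


def unaugment(g: Grid, k: int, perm: list[int]) -> Grid:
    inv = invert_perm(perm)
    return inverse_dihedral([[inv[c] for c in row] for row in g], k)


if __name__ == "__main__":
    rng = random.Random(0)
    task_grid = [[0, 1, 2], [3, 4, 5]]
    for k in range(8):
        perm = random_color_perm(rng)
        assert unaugment(augment(task_grid, k, perm), k, perm) == task_grid
    print("all 8 dihedral variants round-trip")
```

Because every variant is an invertible transformation of an original task, a model that has seen other variants of the same eval task only needs to handle a new combination of these transforms, not a genuinely new task.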

So -- don't get too excited just yet. But I do think this kind of architecture research is valuable (when accompanied by a proper empirical validation signal) and that the HRM idea is very interesting.

Also, to be clear, I don't think the authors had any intent to mislead or hide the experimental issue -- they probably didn't realize what their training setup actually meant.
